Mastering Responsible AI: Frameworks and Regulations

Why Responsible AI Matters for Today's Business Leaders

🌟 AI technology is advancing far faster than Responsible AI practices are. 🤖 We need to work together to raise awareness of Responsible AI and put its practices into action. 🌍

In this article, we discuss why you need to learn Responsible AI, the impact and timelines of government AI acts, the landscape of Generative AI risks and solutions, and the available frameworks for implementing Responsible AI.

Why you need to learn Responsible AI and its proposed frameworks

1. Compliance with government guidelines

Governments have begun to act, producing AI legislation such as the EU AI Act along with accompanying guidelines. Future AI-based solutions and services will need to comply with these acts.

AI Act Details and Timeline

- The European Union’s AI Act, adopted by the European Parliament in March 2024 and approved by the Council in May 2024, focuses on high-risk AI applications and mandates transparency for general-purpose AI models.

- U.S. businesses, especially those operating in the EU, must prepare to comply with these regulations to avoid significant penalties.

- On October 30, 2023, President Biden signed a groundbreaking executive order aimed at ensuring the safe, secure, and trustworthy development and use of artificial intelligence (AI). This executive order represents one of the most significant actions taken by any government globally to address the complexities and risks associated with AI.

Introduction to the EU AI Act: The EU AI Act is the world’s first comprehensive regulation on artificial intelligence, aimed at ensuring safe and responsible use of AI technologies across the EU. 🤖

Regulatory Framework: Proposed by the European Commission in April 2021, the Act classifies AI systems based on the risk they pose, with varying levels of regulation depending on the risk category.

Safety and Transparency: The European Parliament emphasizes that AI systems must be safe, transparent, traceable, non-discriminatory, and environmentally friendly, with human oversight to prevent harmful outcomes.

Risk Categories

Unacceptable Risk: AI systems in this category will be banned. They include:

- Cognitive manipulation of vulnerable groups (e.g., voice-activated toys that encourage dangerous behavior)

- Social scoring based on behavior or socio-economic status

- Biometric identification systems, including real-time facial recognition, with limited exceptions for law enforcement

High Risk: AI systems that negatively affect safety or fundamental rights are considered high risk. Those used in specific areas must be registered in an EU database.

Areas include:

1. Critical infrastructure management

2. Education and vocational training

3. Law enforcement

4. Migration and asylum management

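Transparency for Generative AI: Generative AI, such as ChatGPT, will not be classified as high risk, but it must comply with transparency requirements, such as disclosing that content was generated by AI, and it must be designed to prevent the generation of illegal content. High-impact general-purpose AI models that might pose systemic risk will have to undergo thorough evaluations.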
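The tiered structure above can be pictured as a lookup that checks the strictest tier first. The sketch below is a minimal illustration only, assuming a hypothetical `AISystem` record and hypothetical keyword sets copied from the examples in this article, plus a catch-all tier for everything else. It is not the Act's legal test; real classification requires legal analysis of the full regulation.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"  # banned outright
    HIGH = "high"                  # registration in an EU database
    TRANSPARENCY = "transparency"  # generative AI disclosure duties
    MINIMAL = "minimal"            # catch-all tier for everything else


# Hypothetical labels taken from the article's examples, not the Act's legal definitions.
BANNED_PRACTICES = {
    "cognitive manipulation of vulnerable groups",
    "social scoring",
    "real-time biometric identification",
}
HIGH_RISK_AREAS = {
    "critical infrastructure management",
    "education and vocational training",
    "law enforcement",
    "migration and asylum management",
}

# Obligations per tier, summarized from the article.
OBLIGATIONS = {
    RiskTier.UNACCEPTABLE: ["prohibited in the EU"],
    RiskTier.HIGH: ["register in the EU database"],
    RiskTier.TRANSPARENCY: ["disclose AI-generated content", "prevent illegal outputs"],
    RiskTier.MINIMAL: [],
}


@dataclass
class AISystem:
    """Hypothetical description of an AI system, used only for this sketch."""
    name: str
    practice: Optional[str] = None  # what the system does, if it matches a banned practice
    area: Optional[str] = None      # area in which the system is deployed
    is_generative: bool = False


def classify(system: AISystem) -> RiskTier:
    """Return the strictest tier that applies, checking the banned tier first."""
    if system.practice in BANNED_PRACTICES:
        return RiskTier.UNACCEPTABLE
    if system.area in HIGH_RISK_AREAS:
        return RiskTier.HIGH
    if system.is_generative:
        return RiskTier.TRANSPARENCY
    return RiskTier.MINIMAL


if __name__ == "__main__":
    examples = [
        AISystem("citizen scorer", practice="social scoring"),
        AISystem("exam grader", area="education and vocational training"),
        AISystem("chat assistant", is_generative=True),
        AISystem("spam filter"),
    ]
    for system in examples:
        tier = classify(system)
        print(f"{system.name}: {tier.value} -> {OBLIGATIONS[tier]}")
```

Checking the banned tier first means a prohibited practice is never downgraded because of where it is deployed. A real system can attract obligations from more than one tier (for example, a generative model deployed in a high-risk area); this sketch returns only the strictest one for simplicity.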

Supporting Innovation: The Act aims to foster innovation by providing start-ups and SMEs with testing environments that simulate real-world conditions before public release.

Implementation Timeline: The Act was adopted by the European Parliament in March 2024 and approved by the Council in May 2024. It will be fully applicable 24 months after entry into force, though some provisions apply sooner and others later.

Key timelines:

- Ban on unacceptable-risk AI systems: 6 months after entry into force

- Codes of practice: 9 months after entry into force

- Transparency rules for general-purpose AI: 12 months after entry into force

- High-risk systems compliance: 36 months after entry into force

This regulatory framework aims to balance innovation with safety and ethical considerations in the rapidly evolving AI landscape.
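Because each milestone is an offset from the date of entry into force, a compliance plan reduces to simple calendar arithmetic. The sketch below assumes an entry-into-force date of 1 August 2024 (the date the Act entered into force after publication in the Official Journal) and also includes the 24-month full-applicability milestone. It uses naive month addition; the regulation itself fixes the exact application dates, which can fall a day later than this arithmetic gives, so treat the output as approximate planning dates.

```python
import calendar
from datetime import date

# Months after entry into force, as listed in the article (24 months = full applicability).
MILESTONES = {
    "Ban on unacceptable-risk AI systems": 6,
    "Codes of practice": 9,
    "Transparency rules for general-purpose AI": 12,
    "Full applicability": 24,
    "High-risk systems compliance": 36,
}


def add_months(start: date, months: int) -> date:
    """Return the date `months` calendar months after `start`.

    If the target month is shorter (e.g. 31 January + 1 month), the day is
    clamped to the last day of that month.
    """
    index = start.month - 1 + months
    year = start.year + index // 12
    month = index % 12 + 1
    last_day = calendar.monthrange(year, month)[1]
    return date(year, month, min(start.day, last_day))


def compliance_timeline(entry_into_force: date) -> dict[str, date]:
    """Map each milestone to its approximate date using naive month arithmetic."""
    return {name: add_months(entry_into_force, m) for name, m in MILESTONES.items()}


if __name__ == "__main__":
    # Assumed entry-into-force date; check the regulation for exact application dates.
    for name, when in compliance_timeline(date(2024, 8, 1)).items():
        print(f"{when.isoformat()}  {name}")
```

Keeping the offsets in a single table separates the regulatory data from the date logic, so the same function can be reused if a milestone changes or a different entry-into-force date needs to be checked.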

2. To Save Us from a Third AI Winter

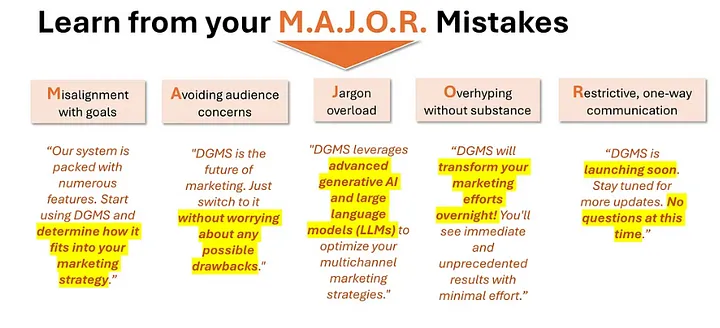

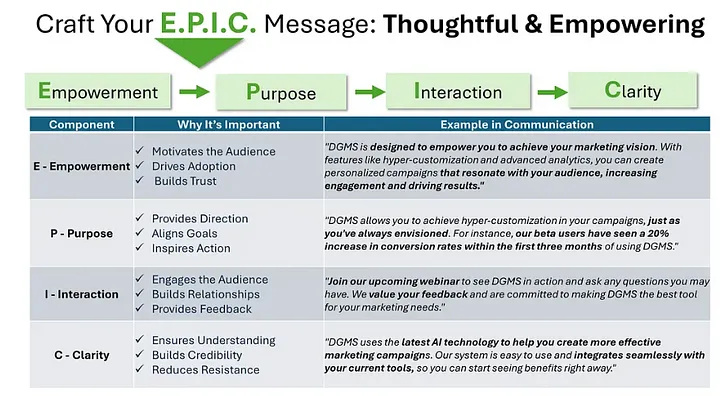

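Poor communication and a poor understanding of AI can erode public trust and lead to stagnation in AI development, a period of reduced interest and funding known as an AI winter. AI has already gone through two such winters, in the 1970s and in the late 1980s to early 1990s. Effective communication and responsible practices can help prevent a third.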

Author: Gautam Roy

Gautam Roy proposed the EPIC framework to promote responsible communication about AI.

Author: Gautam Roy

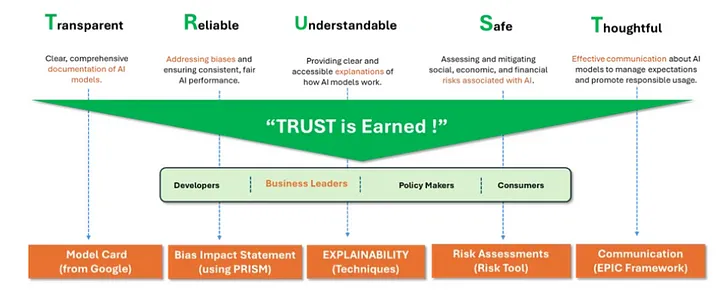

Responsible AI Frameworks

These frameworks guide the development and deployment of AI systems to avoid bias, ensure fairness, and maintain transparency.

Learning and implementing these frameworks helps build trust and minimize the risks associated with AI applications.

Trust Framework

Author: Gautam Roy