Building a Foundation Model for Personalized Recommendations

Overview

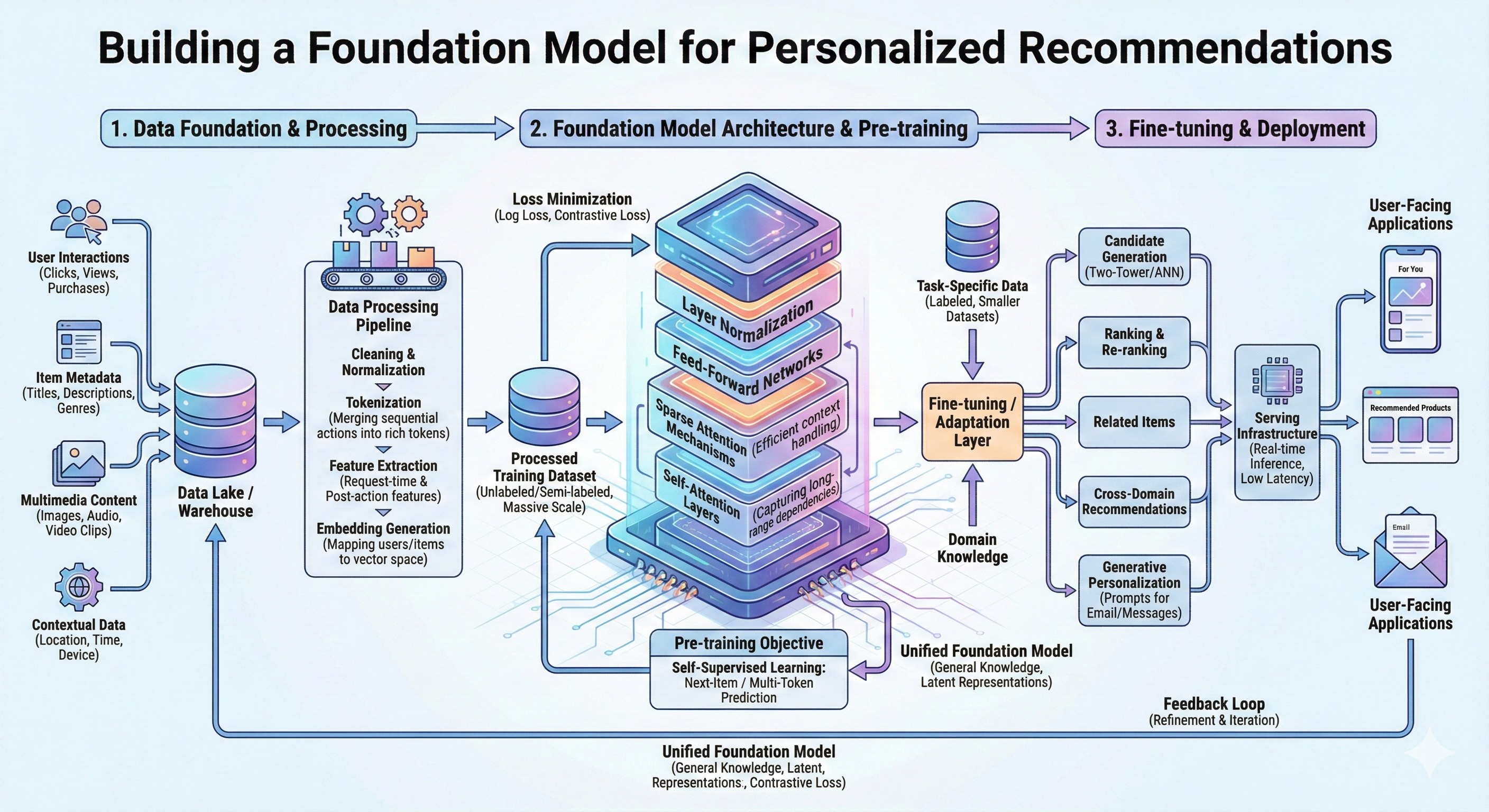

Modern recommendation systems face a hidden complexity. As products grow, personalization grows with them. Over time, teams end up maintaining dozens of specialized models — one for “continue watching,” another for “top picks,” another for ranking, another for candidate generation. Each works well individually, but together they create a fragmented system that is hard to maintain, expensive to scale, and difficult to innovate on.

This case study explains how a large streaming platform rethought personalization by building a single foundation model for recommendations — inspired by how large language models (LLMs) replaced many smaller NLP models. Instead of training multiple task-specific models, the goal became simple: learn user preferences once, then reuse that intelligence everywhere.

The Problem with Many Small Models

For years, recommendation systems evolved incrementally. Each new product feature required:

- A new dataset

- A new training pipeline

- A new model

- Separate maintenance

While effective short-term, this approach created several challenges:

- High engineering and training costs

- Repeated feature engineering across models

- Hard to transfer improvements between systems

- Limited learning from long-term user history

- Short context windows due to latency constraints

The system worked, but it didn’t scale elegantly.

The team needed a centralized, scalable architecture.

The Core Idea: A Recommendation Foundation Model

Inspired by LLMs, the team adopted a new philosophy:

Train one large model on massive interaction data, then reuse it across tasks.

Instead of building 10–20 specialized recommenders, they built:

- One large sequential model

- Trained on full user histories

- Using next-event prediction

- Producing reusable embeddings

This model becomes the “brain,” and downstream systems simply consume its outputs.

Data as the Foundation

Like language models train on tokens, recommendation models train on user interactions.

But raw clicks aren’t meaningful. They must be structured.

Interaction Tokenization

User actions are converted into tokens such as:

- Play

- Pause

- Watch duration

- Device type

- Time

- Content metadata

Multiple small actions are merged into meaningful units (e.g., a full movie watch instead of dozens of play/pause events).

Why? Because too granular data increases sequence length, while too coarse loses signals. Tokenization balances both.

Modeling Long Histories

Active users may have thousands of interactions. Standard transformers struggle with this scale, especially with strict millisecond latency requirements.

Two smart solutions were introduced:

Sparse Attention

Reduces computation so longer histories can fit in memory.

Sliding Window Sampling

Trains on different overlapping segments of history over time.

Why? To learn long-term preferences without exploding compute cost.

What Each Token Contains

Unlike text tokens, interaction tokens are rich and multi-dimensional.

Each token may include:

- Item ID

- Genre

- Device

- Watch duration

- Time of day

- Locale

- Request context

These are embedded directly for end-to-end learning.

Why? Because recommendations depend on context, not just “what” but also “when, how, and where.”

Training Objective

The model uses autoregressive next-token prediction, similar to GPT.

But recommendations require tweaks.

Modifications

- Important actions (full movie watch) weighted higher than minor ones

- Multi-token prediction to capture long-term satisfaction

- Auxiliary targets (genres, language) for better learning

Why? Because predicting only the next click can be short-sighted. The system should learn long-term taste.

Unique Challenges in Recommendations

Cold Start Problem

New titles have no interaction data.

To handle this:

- Combine learnable ID embeddings + metadata embeddings

- Use genres, storyline, tags

- Let metadata guide early predictions

Why? So new content can be recommended immediately.

Incremental Training

Retraining from scratch is expensive.

Solution:

- Warm start from previous model

- Add embeddings only for new entities

Why? Faster updates without losing knowledge.

How It’s Used Downstream

The foundation model supports multiple applications:

Direct Prediction

Used directly for next-item recommendations.

Embeddings

Generates user and item embeddings for:

- Ranking

- Retrieval

- Similarity search

- Personalization

Fine-Tuning

Smaller teams can fine-tune the base model for specific tasks.

Why? Reuse reduces duplication and accelerates innovation.

Results & Impact

Moving to a foundation approach delivered:

- Fewer specialized models

- Lower maintenance cost

- Better knowledge transfer

- Stronger long-term preference modeling

- Higher recommendation quality

- More scalable training

Performance consistently improved as:

- Model size increased

- Data scale increased

- Context window increased

Just like scaling laws in LLMs.

Key Takeaways

What Worked

- Centralizing preference learning

- Treating interactions like tokens

- Using transformer-based sequence modeling

- Leveraging semi-supervised next-event prediction

- Reusing embeddings across systems

Why It Matters

Because personalization is a sequence understanding problem, not just a ranking problem.

Understanding long-term behavior unlocks better recommendations than isolated predictions.

Conclusion

This case study highlights a major architectural shift:

From Many small, task-specific models

To One large foundation model powering everything

By learning from massive interaction histories and sharing that knowledge across tasks, recommendation systems become simpler, smarter, and more scalable.

Just like LLMs transformed NLP, foundation models are now transforming personalization — turning fragmented pipelines into unified intelligence.

And in the world of recommendations, that shift makes all the difference.

From Conversational Data to Real-World AI Impact

At ElevateTrust.ai, we build AI systems that go far beyond dashboards, demos, and proofs of concept — into production-grade, business-critical deployments.

We help organizations turn AI vision into execution through:

- AI-powered Video Analytics & Computer Vision

- Edge AI, Cloud, and On-Prem deployments

- Custom detection models tailored to industry-specific needs

From attendance automation and workplace safety to intelligent surveillance and monitoring, our solutions are designed to operate reliably in real-world environments — where accuracy, latency, and trust truly matter.

Just as conversational AI agents are transforming how teams interact with data, we focus on building AI systems that understand context, scale confidently, and deliver measurable business outcomes.

Book a free consultation or DM to get started https://elevatetrust.ai

Let’s build AI that doesn’t just watch — it understands.